Filtered Vector Search: A Key Technology in RAG Systems

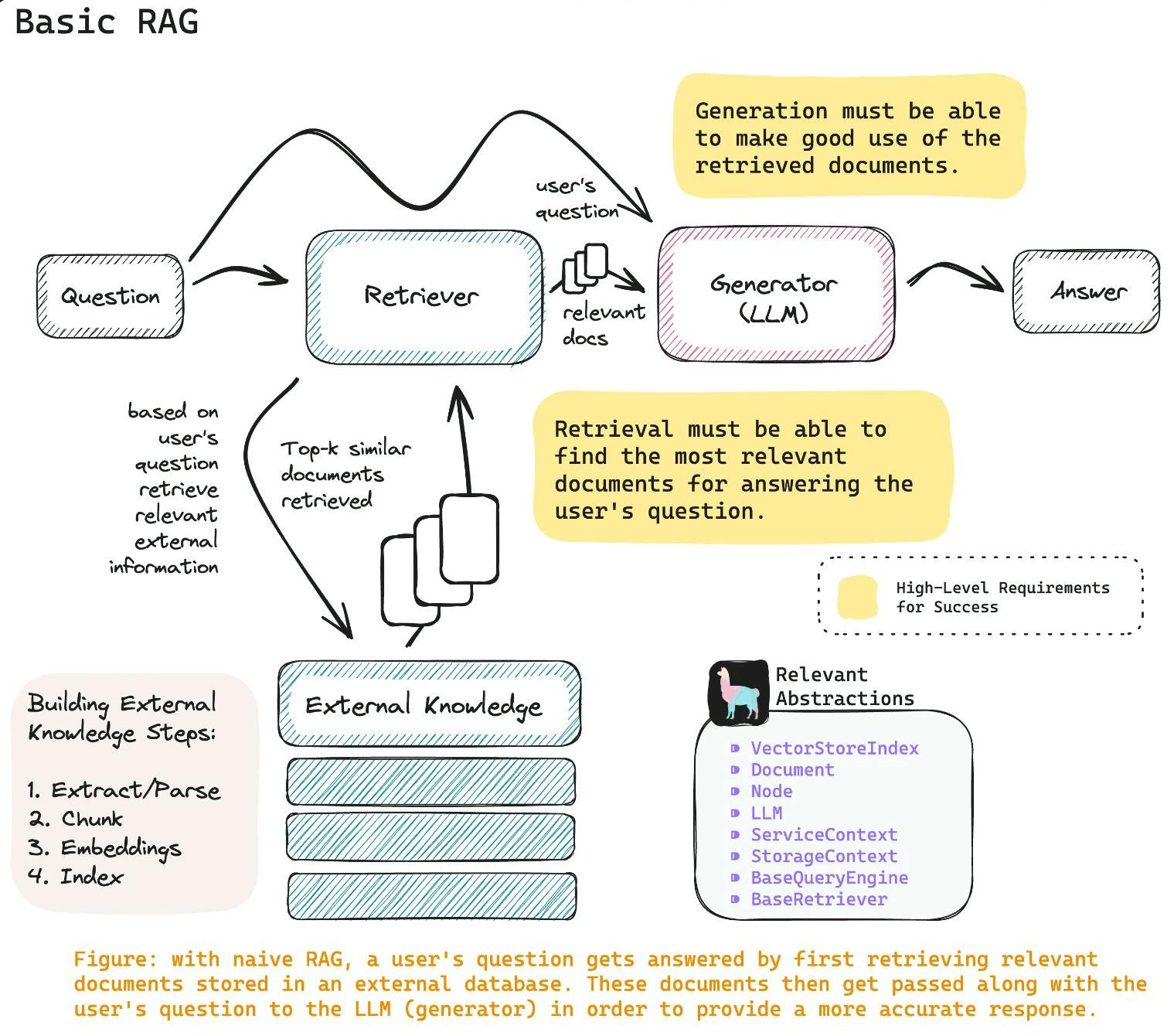

In a Retrieval-Augmented Generation (RAG) system, documents (plain text, PDFs, Word files, PowerPoint slides, etc.) are first split into chunks. Each chunk is converted into an embedding vector, and the vectors are stored and indexed in a vector database. When a user asks a question, the system retrieves the most relevant chunks from the index and passes them, together with the question, to a large language model (LLM) such as ChatGPT so that it can generate a more accurate answer.

Source: @jerryjliu0
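
The flow described above can be sketched in a few lines of Python. This is only an illustration under loud assumptions: `embed` is a toy bag-of-words stand-in for a real embedding model, the "index" is a plain list rather than a vector database, and all function names are hypothetical.

```python
import math
from collections import Counter

def chunk(text, size=12):
    """Split a document into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

def embed(text):
    """Toy stand-in for an embedding model: lower-cased word counts."""
    return Counter(w.strip(".,?!").lower() for w in text.split())

def similarity(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question, index, k=1):
    """Return the k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(index, key=lambda item: similarity(q, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]

def build_prompt(question, fragments):
    """Combine the question and the retrieved fragments into one LLM prompt."""
    context = "\n".join(fragments)
    return f"Answer using the context below.\n\nContext:\n{context}\n\nQuestion: {question}"

documents = [
    "HNSW is a graph index for approximate nearest neighbor search.",
    "Paris is the capital of France.",
]
# "Index" the documents: one (chunk text, embedding) pair per chunk.
index = [(c, embed(c)) for d in documents for c in chunk(d)]

fragments = retrieve("What is HNSW?", index, k=1)
prompt = build_prompt("What is HNSW?", fragments)
```

In a real system the prompt would then be sent to the LLM; that call is left out here because it depends on the provider.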

Several articles have demonstrated the effectiveness of RAG, with the main conclusions being:

RAG significantly improves the results of GenAI applications;

Even if the data in the vector database was known to GPT-4 during its training, GPT-4 using RAG outperforms versions not using RAG;

Some smaller open-source models, when using RAG, can achieve results close to GPT-4 with RAG.

The core of a RAG system is the vector database that stores its documents. How quickly and accurately this database can find the documents relevant to a question largely determines how well the RAG system works. Vector databases store not only vectors but also the metadata attached to them. Using this metadata for filtered searches can significantly improve search accuracy and the overall effectiveness of the RAG system, thereby improving the experience of GenAI applications.

For example, if the vector database stores numerous research papers, a user might only be interested in specific disciplines or authors. Therefore, adding corresponding filters during searches can significantly improve the relevance of search results. Additionally, filtered vector searches can be used in multi-tenant scenarios, such as in chat or ChatPDF applications, where users only need to search their own chat logs or documents. In such cases, treating each user as a separate partition in the database could impose a significant burden and negatively impact query performance. Hence, using user IDs as filter criteria for filtered vector searches is a more natural approach.
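
As a minimal sketch of the multi-tenant case, the snippet below restricts a similarity search to one user's records by filtering on a `user_id` field. The records, field names, and the `search_for_user` helper are hypothetical, and the search is an exhaustive scan rather than a real vector index.

```python
import math

def cosine(a, b):
    """Cosine similarity between two dense vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Hypothetical toy collection: each record carries a vector plus metadata.
RECORDS = [
    {"id": 1, "user_id": "alice", "vector": [1.0, 0.0]},
    {"id": 2, "user_id": "bob", "vector": [0.9, 0.1]},
    {"id": 3, "user_id": "alice", "vector": [0.0, 1.0]},
    {"id": 4, "user_id": "alice", "vector": [0.8, 0.2]},
]

def search_for_user(query, user_id, k):
    """Return the ids of the k records most similar to `query` that belong to `user_id`."""
    owned = [r for r in RECORDS if r["user_id"] == user_id]
    ranked = sorted(owned, key=lambda r: cosine(query, r["vector"]), reverse=True)
    return [r["id"] for r in ranked[:k]]

print(search_for_user([1.0, 0.0], "alice", k=2))  # [1, 4]
```

Record 2 is very close to the query but belongs to another user, so it never appears in Alice's results.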

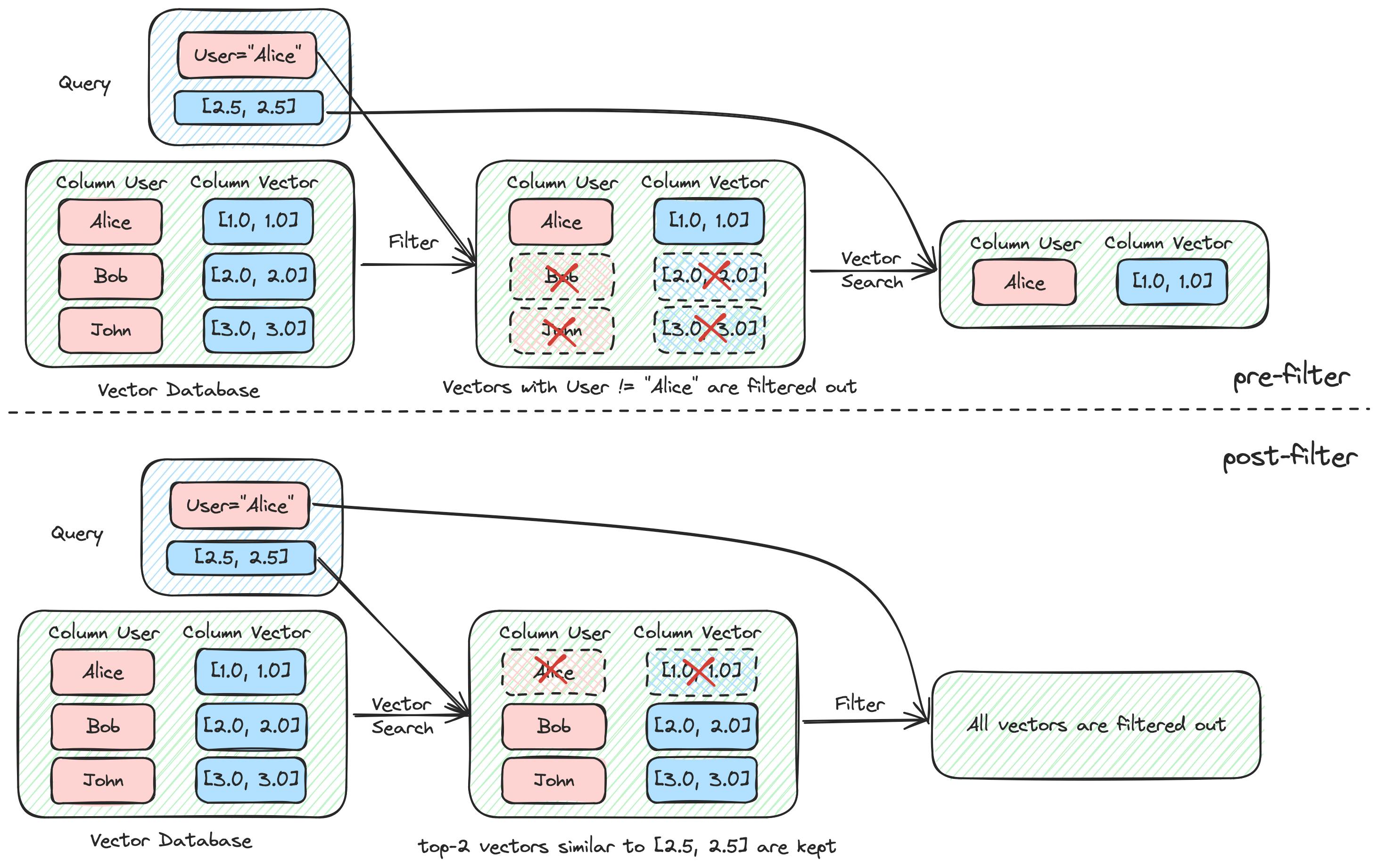

Pre-filtering vs. Post-filtering

There are two approaches to implementing filtered vector searches: pre-filtering and post-filtering. Pre-filtering first uses metadata to select the vectors that meet the criteria, then searches within those vectors. The advantage of this method is that if the user needs the k most similar documents, the database can guarantee k results whenever at least k vectors satisfy the filter. Post-filtering, on the other hand, runs the vector search first to obtain m candidate results, then applies the metadata filter to those candidates. The drawback of this approach is that it is uncertain how many of the m candidates meet the filtering criteria, so the final result might contain fewer than k items, especially when only a small share of the vectors in the dataset meet the criteria.
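
The difference can be reproduced on a toy dataset. The sketch below uses hypothetical one-dimensional vectors, an exhaustive scan in place of a real index, and a `tag` field as the metadata; only 4 of the 100 records carry the tag being searched for.

```python
import math

# Hypothetical toy collection: 100 one-dimensional vectors; every 25th is tagged "rare".
RECORDS = [
    {"id": i, "vector": [float(i)], "tag": "rare" if i % 25 == 0 else "common"}
    for i in range(100)
]

def nearest(records, query, n):
    """Exhaustively return the n records closest to `query` (Euclidean distance)."""
    return sorted(records, key=lambda r: math.dist(query, r["vector"]))[:n]

def pre_filter_search(query, tag, k):
    """Filter on metadata first, then search only among the matching vectors."""
    matching = [r for r in RECORDS if r["tag"] == tag]
    return [r["id"] for r in nearest(matching, query, k)]

def post_filter_search(query, tag, k, m):
    """Search all vectors for m candidates first, then drop those failing the filter."""
    candidates = nearest(RECORDS, query, m)
    return [r["id"] for r in candidates if r["tag"] == tag][:k]

pre = pre_filter_search([10.0], "rare", k=3)
post = post_filter_search([10.0], "rare", k=3, m=10)
print(pre)   # [0, 25, 50]
print(post)  # []
```

Pre-filtering returns the three closest "rare" records, while post-filtering returns nothing because none of the 10 nearest candidates carries the tag. Raising m helps, but at the cost of more work per query.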

The challenge with pre-filtering is twofold: the metadata filter itself must be evaluated efficiently, and the vector index must remain efficient when only a small fraction of vectors pass the filter. For instance, the widely used HNSW (Hierarchical Navigable Small World) algorithm sees a significant drop in search effectiveness when the filtering ratio is low (e.g., only 1% of vectors remain after filtering). In response, Qdrant and Weaviate have explored some solutions, typically falling back from HNSW to brute-force search when the filtering ratio is very low.
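
The fallback idea can be sketched as follows. This is not the actual logic of Qdrant or Weaviate: the 5% threshold, the function names, and the `index_search` callable (a placeholder for a filtered HNSW lookup) are all assumptions made for illustration.

```python
import math

def exact_scan(query, vectors, allowed_ids, k):
    """Brute force: rank only the vectors that pass the filter."""
    ranked = sorted(allowed_ids, key=lambda i: (math.dist(query, vectors[i]), i))
    return ranked[:k]

def filtered_search(query, vectors, allowed_ids, k, index_search, threshold=0.05):
    """Pick a strategy from the filtering ratio (threshold is a hypothetical value)."""
    ratio = len(allowed_ids) / len(vectors) if vectors else 0.0
    if ratio < threshold:
        # Few vectors survive the filter: an exhaustive scan over them is cheap and exact.
        return "brute_force", exact_scan(query, vectors, allowed_ids, k)
    # Enough vectors survive: delegate to the (approximate) vector index.
    return "index", index_search(query, allowed_ids, k)

# Hypothetical data: 1,000 one-dimensional vectors.
vectors = {i: [float(i)] for i in range(1000)}

def stand_in_index(query, allowed_ids, k):
    """Placeholder for a filtered HNSW search; here it simply scans exactly."""
    return exact_scan(query, vectors, allowed_ids, k)

rare = {i for i in vectors if i % 100 == 0}   # 1% of vectors pass the filter
broad = {i for i in vectors if i % 2 == 0}    # 50% of vectors pass the filter

print(filtered_search([130.0], vectors, rare, 2, stand_in_index))   # ('brute_force', [100, 200])
print(filtered_search([130.0], vectors, broad, 2, stand_in_index))  # ('index', [130, 128])
```

The design choice is that an exhaustive scan over 1% of the data costs little and returns exact results, whereas a graph traversal that must skip 99% of its nodes tends to lose both speed and accuracy.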

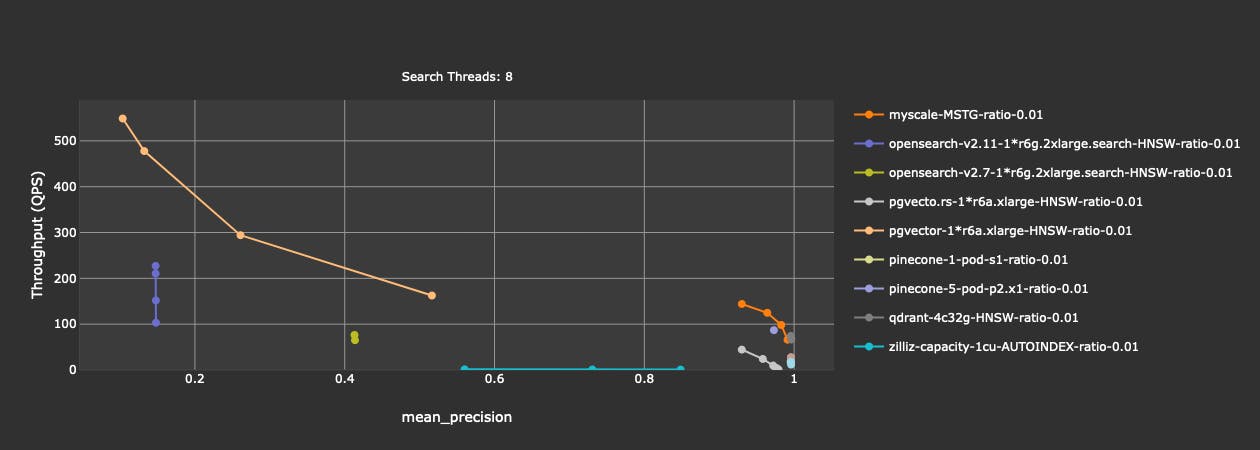

Benchmark Results

The MyScale Vector Database Benchmark tested the cloud services of multiple vector databases. In tests with a filtering ratio of 1% (i.e., only 1% of vectors in the entire database meet the filter criteria), the results are as follows:

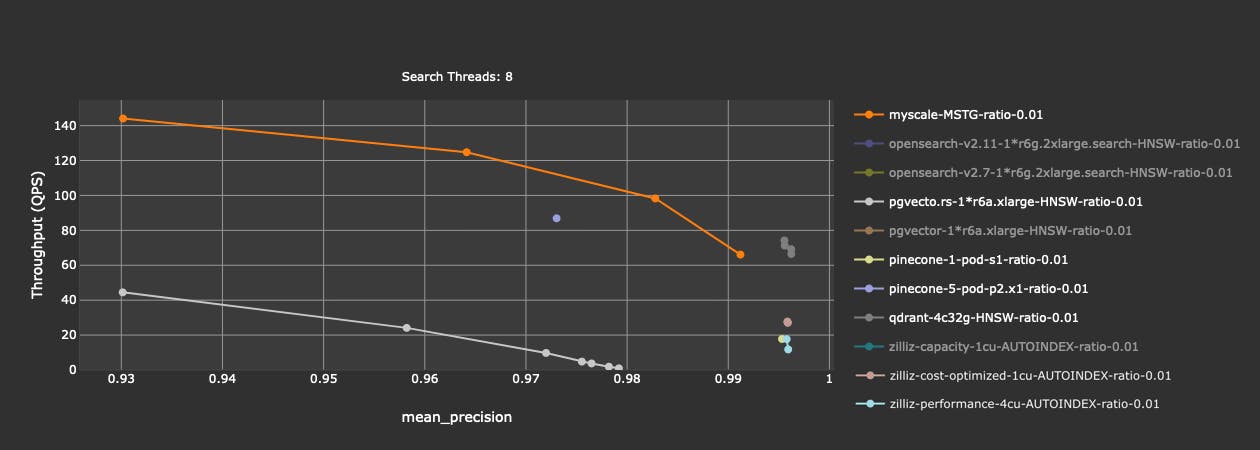
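
The article does not spell out how the two metrics are computed. A common convention, assumed here, is to measure precision as the share of the expected top-k results (from an exact search) that the database actually returns, and QPS as completed queries divided by elapsed time. The helper names below are hypothetical.

```python
def precision_at_k(returned_ids, expected_ids, k):
    """Fraction of the exact top-k ids that appear among the returned ids (assumed definition)."""
    expected = set(expected_ids[:k])
    hits = len(set(returned_ids[:k]) & expected)
    return hits / k

def queries_per_second(num_queries, elapsed_seconds):
    """Throughput: completed queries divided by wall-clock time."""
    return num_queries / elapsed_seconds

# One query where the database found 3 of the 4 exact nearest neighbors.
print(precision_at_k([1, 2, 3, 9], [1, 2, 3, 4], k=4))  # 0.75
print(queries_per_second(200, 4.0))                      # 50.0
```

Under this definition, a database that returns fewer than k results after filtering is penalized in the same way as one that returns wrong results.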

From the results, OpenSearch (v2.7 and v2.11) and pgvector show precision below 50%. Zilliz in capacity mode performs poorly, at less than 1 QPS (queries per second). Excluding these options, let's look at the remaining results:

It is evident that databases with both good precision and performance include MyScale, Qdrant, and Pinecone (p2 pod). Pgvecto.rs, Zilliz (Performance & Cost-optimized modes), and Pinecone (s1 pod) have decent precision but lower performance. Among these databases, MyScale and Pinecone offer fully managed SaaS services. Qdrant and Zilliz (open-source version known as Milvus) offer both SaaS services and open-source versions. Pgvecto.rs is currently a fully open-source Postgres plugin with no SaaS version.

Conclusion

RAG systems combine LLMs with vector databases, improving GenAI applications by supplying the model with both the user's query and the document fragments relevant to it. The search efficiency and accuracy of the vector database are key to system performance. Choosing the right filtering strategy (pre-filtering or post-filtering) and the right vector database is therefore crucial to the effectiveness of a RAG system. Comprehensive evaluations of the available databases help users choose the solution that best fits their needs.

Qin Liu's Blog